Enterprise AI roadmap: from pilot to production

A 5-stage guide to scaling pilots with MLOps, data governance, and KPIs that cut model time-to-production by 40%.

Introduction

Moving from a successful AI pilot to reliable production deployment is one of the most common enterprise challenges. Many pilots demonstrate technical feasibility but fail to scale due to organizational misalignment, data and infrastructure gaps, inadequate MLOps, or unclear ROI metrics. This article provides a practical, step-by-step roadmap for taking enterprise AI from pilot to production. It is intended for business leaders, product managers, and technical leads who must translate experimentation into sustained value.

We will cover prioritization, governance, MLOps, metrics, team structure, and change management. The recommendations are grounded in best practices observed across industries and synthesized for decision makers who need a repeatable process to move AI programs into production safely and at scale (see industry references: McKinsey, Gartner).

Why enterprises need a formal AI roadmap

Enterprises benefit from a formal roadmap because AI initiatives require cross-functional coordination across data, engineering, compliance, and business units. A structured roadmap aligns stakeholders on use-case selection, resource allocation, and risk management while creating clear decision points for scaling. Without a roadmap, pilots risk becoming isolated proofs-of-concept that do not integrate with operations, leading to wasted investment and missed opportunities for automation, cost reduction, or revenue growth.

Key challenges when moving from pilot to production

Technical challenges

Technical obstacles frequently derail production efforts: inconsistent data pipelines, lack of reproducible model training environments, insufficient model monitoring, and poor integration with downstream systems. Address these by standardizing data contracts, implementing version control for datasets and models, and automating CI/CD for models (MLOps). Failure to do so often results in brittle deployments that degrade or break when exposed to production scale or real-world data drift.
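As one illustration of what "standardizing data contracts" can mean in practice, the sketch below checks incoming records against an agreed schema before they reach a model. It is a minimal example under stated assumptions: the `ORDERS_CONTRACT` fields and the `contract_violations` helper are hypothetical names, and a real contract would usually also cover value ranges, nullability, and freshness.

```python
from typing import Any

# Hypothetical contract for an illustrative "orders" feed: field name -> expected type.
ORDERS_CONTRACT: dict[str, type] = {
    "order_id": str,
    "amount": float,
    "country": str,
}


def contract_violations(record: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return human-readable violations; an empty list means the record conforms."""
    problems = []
    for field, expected in contract.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(record[field]).__name__}"
            )
    # Fields the producer added without updating the contract are flagged too.
    for field in sorted(record.keys() - contract.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


if __name__ == "__main__":
    bad_record = {"order_id": "A1", "amount": "10.5"}
    for problem in contract_violations(bad_record, ORDERS_CONTRACT):
        print(problem)
```

Running a check like this in the ingestion pipeline turns a silent upstream schema change into an explicit, reviewable failure.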

Organizational challenges

Organizational friction—unclear ownership, inadequate executive sponsorship, and misaligned KPIs—can block adoption. Business stakeholders must own outcomes, while data science and engineering own delivery. Establishing cross-functional teams and a steering committee helps prioritize high-value use cases and ensures resources follow business impact, not just technical curiosity.

Roadmap: phases and best practices

A pragmatic enterprise AI roadmap typically follows five phases: discovery, pilot, production readiness, scale, and continuous optimization. Use the phase descriptions below to make launch decisions at each gate.

Phase 1 — Discovery and prioritization

Identify and prioritize use cases based on value (revenue uplift or cost reduction), feasibility (data and technical readiness), and regulatory risk. Use a scoring model to rank candidates. Typical activities: stakeholder interviews, data inventory, and preliminary ROI estimates. Decisions from this phase determine where to invest limited resources.
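The scoring model mentioned above can be as simple as a weighted sum over the three criteria. The sketch below is one illustrative way to do it. The weights, the 1-to-5 scales, and the example use cases are all assumptions chosen for the example, not values from any particular framework.

```python
from dataclasses import dataclass

# Illustrative weights (an assumption); in practice the steering group would agree on them.
WEIGHTS = {"value": 0.5, "feasibility": 0.3, "risk": 0.2}


@dataclass
class UseCase:
    name: str
    value: int        # 1 (low) to 5 (high): revenue uplift or cost reduction
    feasibility: int  # 1 to 5: data and technical readiness
    risk: int         # 1 to 5: regulatory risk, where higher is worse


def score(uc: UseCase) -> float:
    """Weighted sum of the three criteria, rounded to two decimals."""
    # Risk is inverted (6 - risk) so that lower regulatory risk raises the score.
    return round(
        WEIGHTS["value"] * uc.value
        + WEIGHTS["feasibility"] * uc.feasibility
        + WEIGHTS["risk"] * (6 - uc.risk),
        2,
    )


def rank(candidates: list[UseCase]) -> list[UseCase]:
    """Return candidates ordered from highest to lowest score."""
    return sorted(candidates, key=score, reverse=True)


if __name__ == "__main__":
    candidates = [
        UseCase("credit scoring", value=5, feasibility=2, risk=5),
        UseCase("invoice matching", value=4, feasibility=5, risk=1),
        UseCase("churn prediction", value=5, feasibility=3, risk=2),
    ]
    for uc in rank(candidates):
        print(uc.name, score(uc))
```

In this made-up example the high-value but high-risk, low-readiness candidate ranks last, which is the kind of trade-off the scoring exercise is meant to surface.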

Phase 2 — Pilot execution

Run focused pilots to validate assumptions and quantify impact. Keep pilots time-boxed and avoid scope creep. Deliverables: a validated prototype, defined success metrics, and a deployment plan. Include a small production-like dataset and realistic performance targets to reduce surprises later.

Phase 3 — Production readiness

Implement robust pipelines, monitoring, and deployment automation. Prepare runbooks and SLAs. Key tasks include building scalable data ingestion, creating model packaging and containerization, establishing CI/CD for models, and implementing observability for data quality and model performance.

Phase 4 — Scaling and operationalization

After initial production launch, expand scope by adding more data sources, regional deployments, or additional models. Focus on reuse: standardized pipelines, shared model registries, and platform services reduce marginal cost of new deployments. Address governance, security, and compliance at scale.

Phase 5 — Continuous optimization

Operationalize continuous monitoring, retraining, and periodic model reviews. Use A/B testing and champion-challenger setups to iterate. Establish a cadence for performance reviews, revalidation, and business outcome alignment.
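A champion-challenger setup can be sketched as scoring both models on the same labeled examples and promoting the challenger only if it wins by a margin. The example below is a minimal sketch: it assumes accuracy is the agreed metric, and the `min_uplift` margin and the toy threshold models are hypothetical. A real comparison would also consider sample size, statistical significance, and guardrail metrics.

```python
from typing import Callable, Sequence, Tuple

Model = Callable[[float], int]         # maps a feature value to a predicted label
Example = Tuple[float, int]            # (feature value, true label)


def accuracy(model: Model, examples: Sequence[Example]) -> float:
    """Fraction of examples the model labels correctly."""
    if not examples:
        raise ValueError("examples must not be empty")
    correct = sum(1 for x, y in examples if model(x) == y)
    return correct / len(examples)


def pick_winner(champion: Model, challenger: Model,
                examples: Sequence[Example], min_uplift: float = 0.02) -> str:
    """Return "challenger" only if it beats the champion by at least min_uplift."""
    uplift = accuracy(challenger, examples) - accuracy(champion, examples)
    return "challenger" if uplift >= min_uplift else "champion"


if __name__ == "__main__":
    # Toy models: each predicts 1 when the input exceeds a threshold.
    champion = lambda x: int(x > 0.7)
    challenger = lambda x: int(x > 0.5)
    holdout = [(0.2, 0), (0.4, 0), (0.55, 1), (0.6, 1), (0.8, 1)]
    print(pick_winner(champion, challenger, holdout))
```

Requiring a minimum uplift, rather than any improvement at all, keeps the production model from flipping back and forth on noise.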

Best practices across these phases include: establishing measurable KPIs before the pilot, automating repeatable tasks, and embedding subject-matter experts in delivery teams. Document assumptions, failure modes, and decision gates so that the organization learns and scales efficiently.

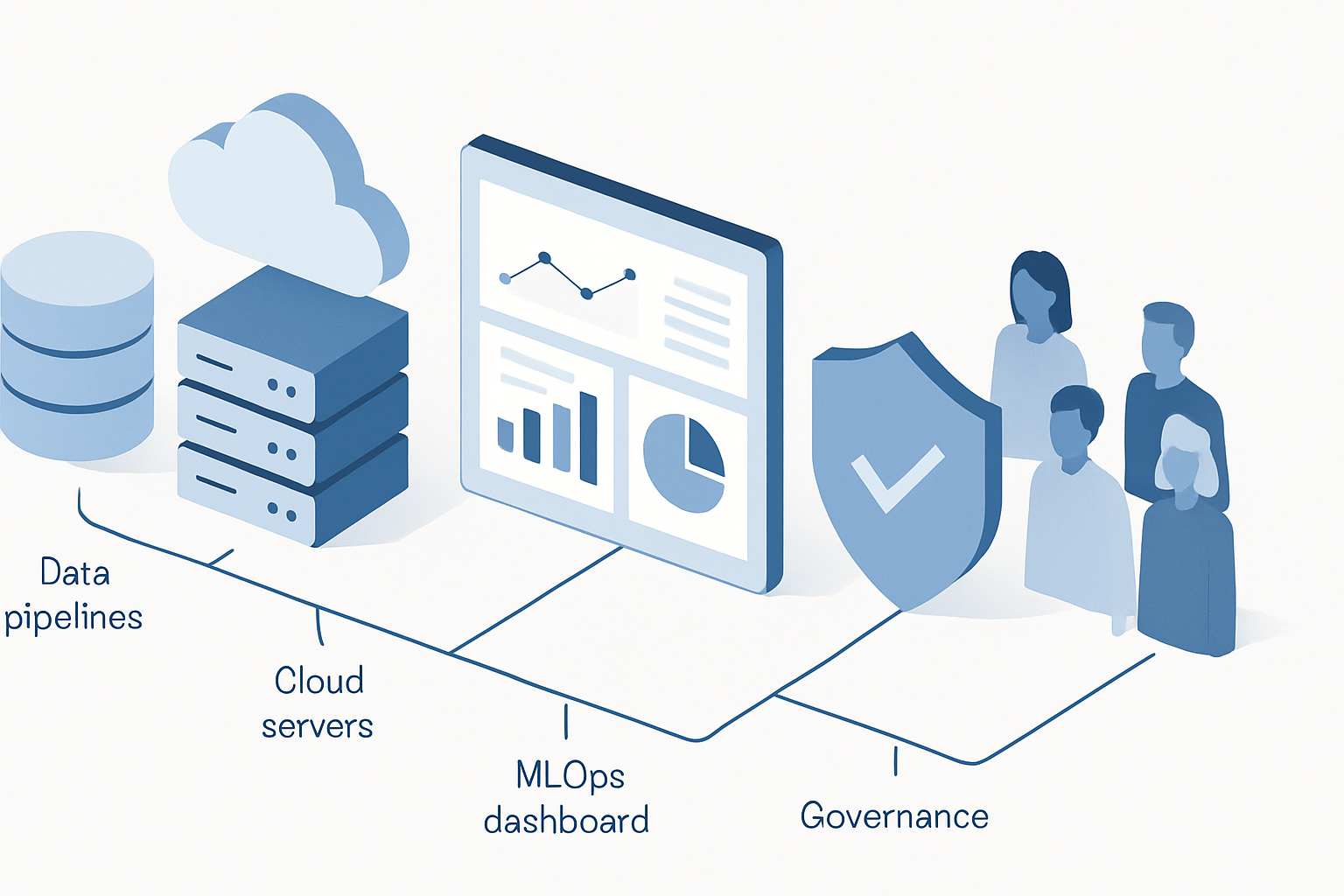

Operational and governance considerations (MLOps, data, compliance)

Production AI is as much an operational challenge as it is a modeling one. MLOps practices—model versioning, automated testing, reproducible pipelines, and deployment orchestration—form the backbone of reliable production systems. Data governance is equally important: define data lineage, access controls, and data quality thresholds. For regulated industries, incorporate explainability, audit trails, and model risk assessments into the release process to ensure compliance and reduce legal exposure (industry frameworks: ISO/IEC, internal audit guidance).

Organize governance as follows:

- Steering committee for strategy and prioritization

- Platform team for shared services and standardization

- Product teams for end-to-end delivery and business outcomes

This structure promotes accountability while enabling centralized efficiency.

Technical controls should include automated tests for data schema changes, model performance gates, and canary deployments to limit blast radius. Operational processes must include incident response, rollback procedures, and periodic model validation schedules.
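To make the canary idea concrete, the sketch below routes a fixed share of traffic to a new model version by hashing a request identifier. It is an illustrative example only: the `route` function and its `canary_percent` parameter are hypothetical, and in many organizations this logic lives in a service mesh, gateway, or serving platform rather than in application code.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Deterministically return "canary" or "stable" for a request id."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    # Hash the id so the same request (or user) always lands in the same bucket.
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    sample = [f"req-{i}" for i in range(10_000)]
    share = sum(route(r, 10) == "canary" for r in sample) / len(sample)
    print(f"canary share: {share:.1%}")
```

Because the assignment is deterministic, rolling back is a matter of setting the percentage to zero, and any incident is confined to the small bucket that saw the new version.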

Key Takeaways

- Start with prioritized use cases that combine business value with data readiness.

- Run short, measurable pilots with business-backed success criteria.

- Invest in MLOps and data governance before scaling to reduce time-to-production.

- Formalize ownership and KPIs: business owns outcomes; platform enables delivery.

- Measure continuously and iterate via controlled experiments and monitoring.

Frequently Asked Questions

How long does it take to move an AI project from pilot to production?

Timelines vary: simple pilots with clean data and clear integration points can move to production in 3–6 months, while complex systems with multiple data sources and compliance requirements may take 9–18 months. The timeline depends on data maturity, organizational alignment, and the readiness of infrastructure and MLOps practices.

What are the minimum team roles required for production AI?

A minimal production AI team typically includes: a business sponsor, a product manager, data engineers, ML engineers or MLOps engineers, data scientists, and a platform engineer or architect. For regulated environments, add compliance and security roles. Cross-functional collaboration is essential to ensure the model meets business needs and operational constraints.

How do you measure success when transitioning to production?

Measure both technical and business KPIs. Technical KPIs include latency, throughput, model accuracy and drift, and availability. Business KPIs tie to revenue, cost savings, customer experience metrics, or process efficiency. Use guardrail metrics (bias checks, explainability indicators) and ROI calculations to validate ongoing value.
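Drift is the least self-explanatory of these technical KPIs, so a small worked example may help. One widely used measure is the population stability index (PSI), which compares the binned distribution of a feature or score at training time with what is seen in production. The sketch below is one possible implementation; the bin counts are made up, and any alert threshold should be treated as an assumption to tune for your own data.

```python
import math
from typing import Sequence


def psi(expected_counts: Sequence[float], actual_counts: Sequence[float],
        eps: float = 1e-6) -> float:
    """Population stability index between two binned distributions.

    PSI = sum over bins of (actual% - expected%) * ln(actual% / expected%).
    """
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both inputs must use the same bins")
    exp_total = sum(expected_counts)
    act_total = sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # eps avoids log(0) and division by zero for empty bins.
        p = max(e / exp_total, eps)
        q = max(a / act_total, eps)
        total += (q - p) * math.log(q / p)
    return total


if __name__ == "__main__":
    training_bins = [25, 25, 25, 25]    # made-up counts per score quartile
    production_bins = [10, 20, 30, 40]  # made-up counts observed in production
    print(round(psi(training_bins, production_bins), 3))
```

A PSI of zero means the two distributions match; larger values indicate a larger shift and can be wired into monitoring as a trigger for review or retraining.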

When should an organization invest in MLOps?

Invest in MLOps before or during the first production deployment. Lightweight practices can start earlier—version control for code and datasets, reproducible environments, and basic CI pipelines—but scalable MLOps capabilities (model registries, automated retraining pipelines, monitoring) are necessary to sustain multiple production models and reduce operational risk.

How do you handle model governance and regulatory compliance?

Adopt a framework that documents model purpose, data lineage, testing evidence, and decision rationale. Maintain audit logs, model cards, and performance reports. For high-risk applications, incorporate external audits and explainability techniques. Governance should be a continuous process, not a one-time checklist.
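The documentation described above can be kept as a structured record that is checked at release time. The sketch below shows one hypothetical shape for such a record; the `ModelCard` fields mirror the items listed in this answer, but the field names, the completeness check, and the example values are assumptions rather than a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    name: str
    version: str
    purpose: str
    data_lineage: list = field(default_factory=list)    # source datasets or tables
    test_evidence: dict = field(default_factory=dict)   # metric name -> value
    decision_rationale: str = ""


def missing_fields(card: ModelCard) -> list:
    """Names of fields left empty; a release gate could require this to be []."""
    return [name for name, value in asdict(card).items() if not value]


def to_audit_json(card: ModelCard) -> str:
    """Serialize the card with stable key order so diffs in an audit log stay readable."""
    return json.dumps(asdict(card), sort_keys=True)


if __name__ == "__main__":
    card = ModelCard(
        name="churn-model",  # illustrative values only
        version="1.2.0",
        purpose="Rank accounts by likelihood of cancellation",
        data_lineage=["crm.accounts", "billing.invoices"],
        decision_rationale="Approved by steering committee after pilot review",
    )
    print(missing_fields(card))
    print(to_audit_json(card))
```

In this example the card fails the completeness check because no test evidence has been recorded, which is the kind of gap a governance process should catch before release rather than during an audit.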
